Voice Commands Unit Testing

Introduction

Voice Commands Unit Testing is a crucial process for validating the reliability of voice recognition systems in real-world scenarios. At its core, it batch-compares the reference transcriptions of recorded audio files with the commands the recognizer returns for those files, which are always commands defined by your Voice Commands grammar.
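
The batch comparison can be pictured with a minimal Python sketch. It is not the platform's implementation: `UnitTest`, `run_batch` and the `recognize` callable are hypothetical names, and `recognize` stands in for whatever engine call turns an audio file into a recognized command.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class UnitTest:
    audio_file: str     # recording made with production microphones, accents and noise
    transcription: str  # reference text, written exactly as the grammar spells it


def run_batch(tests, recognize: Callable[[str], Optional[str]]):
    """Return (audio_file, expected, recognized, passed) for every unit test.

    `recognize` is a hypothetical stand-in for the recognizer: it takes an
    audio file path and returns the command it recognized.
    """
    results = []
    for test in tests:
        recognized = recognize(test.audio_file)
        # Strict comparison: case and spelling must match exactly.
        passed = recognized == test.transcription
        results.append((test.audio_file, test.transcription, recognized, passed))
    return results


# Hypothetical engine output used in place of real recognition.
fake_engine = {"pick_01.wav": "ready", "pick_02.wav": "two"}.get
tests = [UnitTest("pick_01.wav", "ready"), UnitTest("pick_02.wav", "Two")]
results = run_batch(tests, fake_engine)
```

With this fake engine, the first test passes and the second fails, because "Two" and "two" differ in case.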

Ensuring Accuracy in Real-World Environments

The objective is to verify that your Voice Commands model accurately interprets user or employee inputs, even under conditions that mimic the production environment, so that the system performs as expected once deployed.

The key to effective Voice Commands Unit Testing lies in the quality and relevance of the audio data. Rather than testing with arbitrary audio, use production-quality recordings that reflect the actual conditions in which the system will operate.

This means using the same microphone setups, capturing specific voice accents, and even simulating noisy environments where commands may be issued. By doing so, you create a robust testing framework that accounts for variables like background noise, speech patterns, and hardware differences, all of which can impact the accuracy of voice recognition.

Beyond technical validation, this method bridges the gap between controlled lab testing and the unpredictable nature of live environments, ultimately leading to a more seamless and trustworthy development process.

How to proceed with unit testing?

Access unit tests through the dedicated button in the Voice Commands widget. Note that you must have access to the Voice Commands technology to view this page.

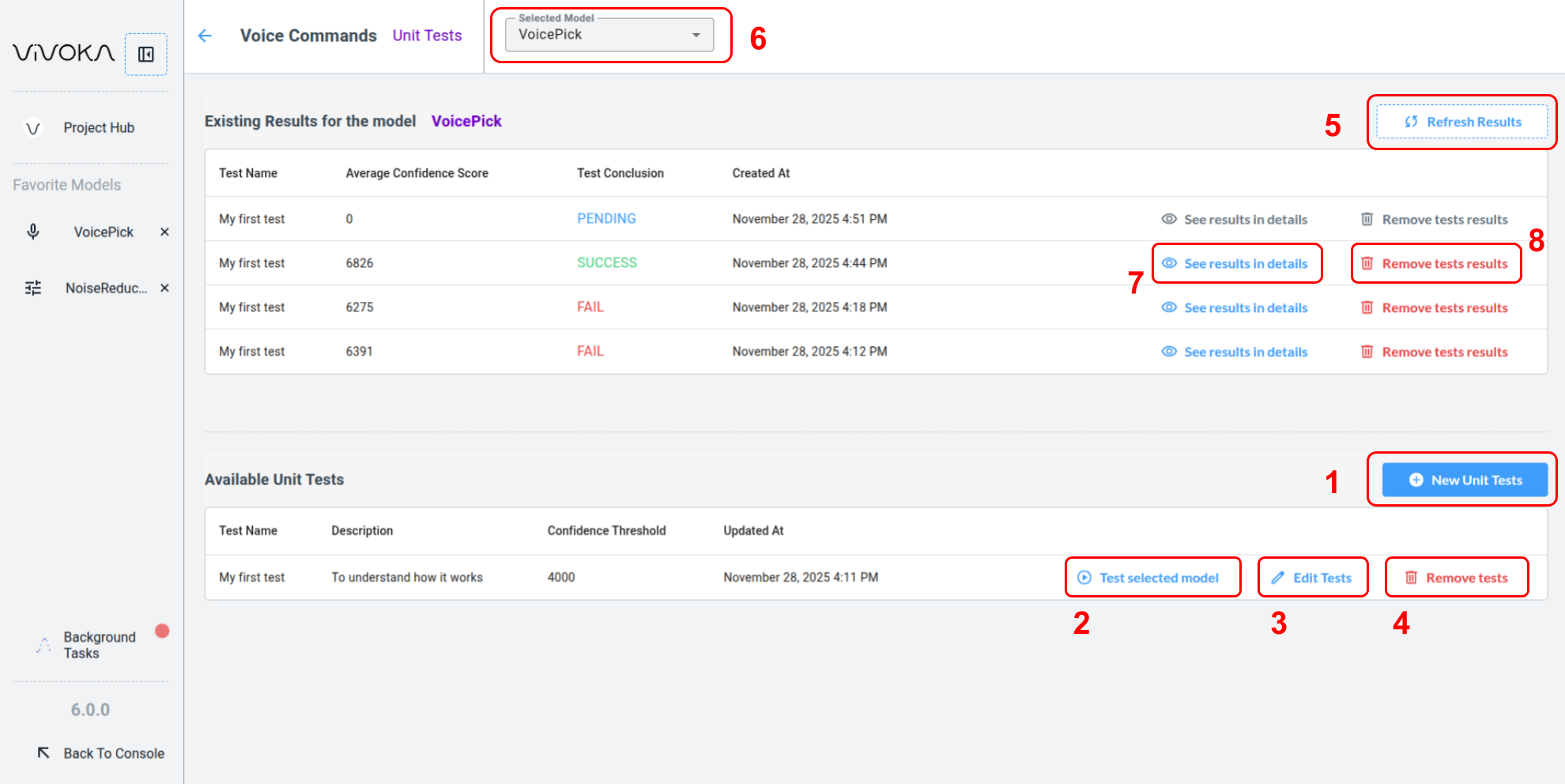

Dashboard for Unit Testing Voice Commands models

Use the New Unit Tests button (1) to start creating a Unit Test.

Test the Selected Model (6) using the Test selected model button (2). This creates a pending process and a Background Task. See the Background Tasks documentation for details.

The Edit Tests button (3) allows you to fix transcriptions, add or remove tests, or adjust the confidence threshold. You can Remove Tests (4) without affecting the result history: test results are kept independently of the Unit Tests that produced them.

Click Refresh Results (5) once the task is complete to display the Test Conclusion and enable the See results in details button (7), even if the test failed. Test failures are valuable feedback, and guidance on interpreting them is provided in the second part of this documentation: Understand Results.

You can Remove tests results (8) from the history if desired. This action does not affect your tests, models, or any other project data.

Understand Results

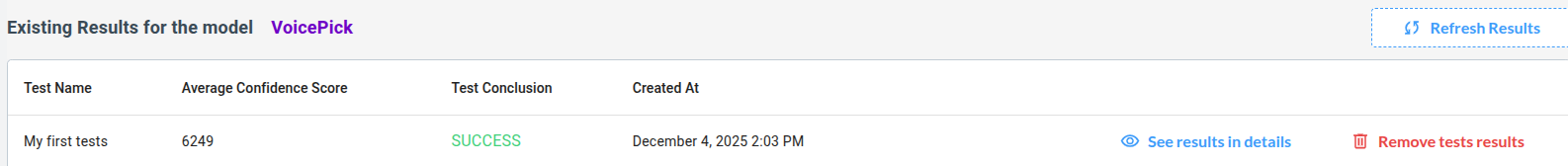

When a Test leaves the pending state, it becomes either a success or a failure. A success means all Unit Tests inside it pass. If any Unit Test fails, the entire Test fails.

Our test passed!

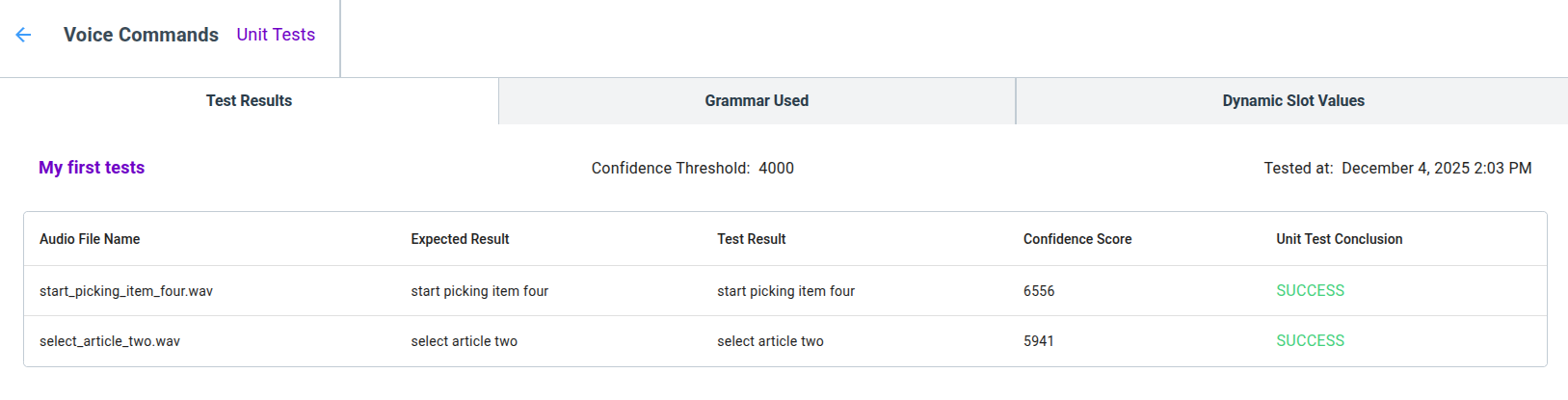

Below are the details of this test, to help you understand Unit Test results in depth:

Example of Unit Tests Results

The voice command recognizer returns the most probable result among the grammar options, which means it can only answer with commands defined in the grammar. Unit tests therefore compare each audio transcription strictly against the recognized command, including case and spelling.

This strict comparison can sometimes cause a test to fail for the wrong reason: the command may be recognized correctly, but a poorly written transcription can trigger a failure.

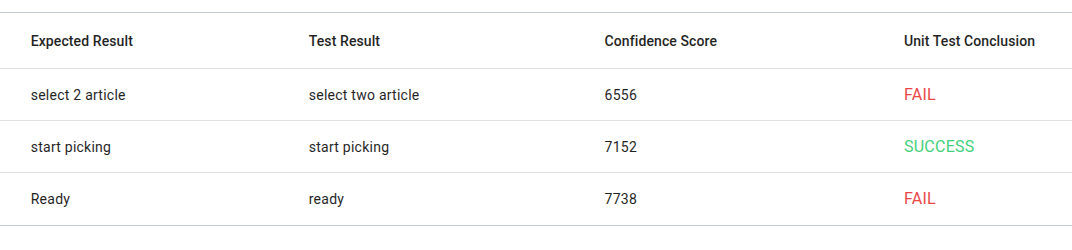
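
When triaging a failure, a hypothetical helper like the one below can flag transcriptions that differ from the recognized command only in case or spacing, which points to a transcription problem rather than a recognition problem. This is a diagnostic sketch only: the unit test itself stays strict, and spelling differences such as "2" versus "two" are not caught by this normalization.

```python
def normalize(text: str) -> str:
    # Collapse runs of whitespace and ignore case.
    return " ".join(text.split()).casefold()


def triage(transcription: str, recognized: str) -> str:
    """Label a comparison result (hypothetical helper, not a platform feature)."""
    if recognized == transcription:
        return "pass"
    if normalize(recognized) == normalize(transcription):
        # Same words, different case or spacing: likely a transcription issue.
        return "fail: transcription differs only in case or spacing"
    return "fail"
```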

Let’s explore two failing Unit Tests: one that fails although it should pass, and one whose failure reveals a real error in the grammar.

Case 1: false negative

A false negative occurs when a transcription differs from the grammar, leading to a failed Unit Test even though the recognizer understood the command correctly.

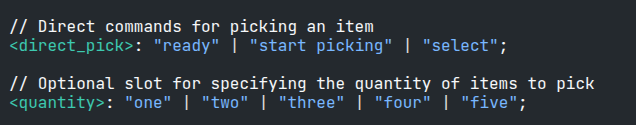

A glimpse of the grammar

Example of false-negative Unit Test results

In this example, the grammar options are “ready” and “two”. Transcriptions written as “Ready” or “2” can never match, because the recognizer only returns the exact grammar options.

Fix this by editing the transcriptions so that their case and spelling match the grammar.

Do not fix it by adding multiple grammar options that "sound" the same. For example, do not write "2" | "two" in your grammar just to pass the test.
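
Transcriptions that can never match can be spotted before running tests by checking every word against the words that appear in the grammar's quoted options. The sketch below is a hypothetical pre-check, not a platform feature; it uses a simple regular expression rather than a real BNF+EM parser, and the one-line grammar is a made-up stand-in for the grammar in this example.

```python
import re


def grammar_words(grammar_text: str) -> set:
    """Collect every word found inside double-quoted grammar options."""
    words = set()
    for option in re.findall(r'"([^"]*)"', grammar_text):
        words.update(option.split())
    return words


def unknown_words(transcription: str, vocabulary: set) -> list:
    """Return the transcription words the recognizer could never output."""
    return [word for word in transcription.split() if word not in vocabulary]


# Hypothetical minimal grammar with the two options from this example.
vocab = grammar_words('<main>: "ready" | "two";')
```

A transcription that passes this check can still be unreachable, since the check looks only at individual words, not at the word order the grammar allows.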

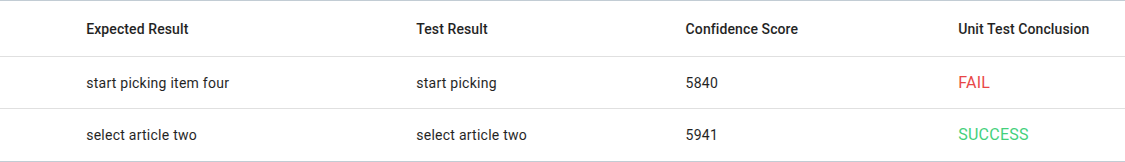

Case 2: grammar structure errors

#BNF+EM V2.1;

!grammar VoicePickGrammar;

!start <main>;

<main>: <direct_pick>;

<direct_pick>: "start picking" | "select" !optional(<itemtype> <itemnumber>);

<itemtype>: "article" | "object" | "item";

<itemnumber>: "one" | "two" | "three" | "four" | "five";

Let’s assume this grammar is meant to accept commands such as:

start picking item four

select article two

Why is only “start picking” detected?

Since “select article two” works, the grammar appears to function as intended, and at first glance the recognizer seems to fail to understand “item four”. However, the root cause is a structural error in the grammar that the syntax parser cannot detect. This error prevents the grammar from being used as designed.

The error comes from this line:

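<direct_pick>: "start picking" | "select" !optional(<itemtype> <itemnumber>);

As in logic, where OR has a lower priority than AND, the alternation symbol | has a lower priority than the sequence of elements around it. The rule is therefore read as "start picking" | ("select" !optional(<itemtype> <itemnumber>)): the optional item type and number apply only to the “select” option, just as in arithmetic a multiplication is computed before an addition.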

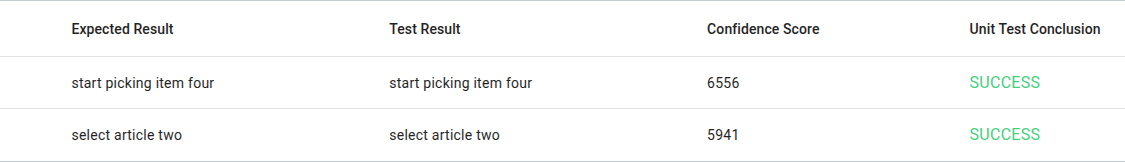

Incorrect reading: 7 × 4 + 5 = 7 × (4 + 5) = 7 × 9 = 63

Correct reading: 7 × 4 + 5 = 28 + 5 = 33

For the grammar to work as intended, the rules must be separated like this:

<main>: <direct_pick> !optional(<itemtype> <itemnumber>);

<direct_pick>: "start picking" | "select";

This way, the grammar applies the optional item type and number regardless of which option was matched by the <direct_pick> rule.
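
To see the difference between the two versions, the sketch below hand-translates both grammars into nested Python tuples and enumerates every phrase each one accepts. It is not a BNF+EM parser: the tuple encoding and the `expand` function are illustrative assumptions, and the encoding applies the precedence rule described above, where a sequence binds more tightly than |.

```python
from itertools import product


def expand(node, rules):
    """Return the set of phrases a grammar node can produce."""
    if isinstance(node, str):             # quoted option, e.g. "select"
        return {node}
    kind, arg = node
    if kind == "ref":                     # <rule> reference
        return expand(rules[arg], rules)
    if kind == "opt":                     # !optional(...): may produce nothing
        return {""} | expand(arg, rules)
    if kind == "alt":                     # a | b | ...
        return set().union(*(expand(a, rules) for a in arg))
    if kind == "seq":                     # a b ... (every combination, in order)
        parts = [expand(a, rules) for a in arg]
        return {" ".join(w for w in combo if w) for combo in product(*parts)}
    raise ValueError(f"unknown node kind: {kind}")


common = {
    "itemtype": ("alt", ["article", "object", "item"]),
    "itemnumber": ("alt", ["one", "two", "three", "four", "five"]),
}
tail = ("opt", ("seq", [("ref", "itemtype"), ("ref", "itemnumber")]))

# Original: the optional tail is attached to "select" only.
original = dict(common,
                direct_pick=("alt", ["start picking", ("seq", ["select", tail])]),
                main=("ref", "direct_pick"))

# Fixed: the optional tail follows whichever <direct_pick> option matched.
fixed = dict(common,
             direct_pick=("alt", ["start picking", "select"]),
             main=("seq", [("ref", "direct_pick"), tail]))

original_phrases = expand(original["main"], original)
fixed_phrases = expand(fixed["main"], fixed)
```

The original version yields 17 phrases, none of which combine “start picking” with an item; the fixed version yields 32, including “start picking item four”.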

It worked!

This example demonstrates that unit testing can uncover structural errors in grammars. Run many tests, even seemingly trivial ones, because small details can hide critical issues.