How-to: Create and use a Wake Word

What is a Wake Word?

A Wake Word is a specific word or phrase that activates the Automatic Speech Recognition (ASR) system so it starts listening for and processing voice commands. This lets the ASR system remain in a low-power or idle state when not in use, which conserves energy and computational resources. Once the Wake Word is detected, the ASR system switches from passive listening to active listening, ready to process the spoken commands that follow.
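The passive/active switch can be illustrated with a minimal, SDK-independent C++ sketch. The `WakeWordGate` class and `ListeningState` enum are hypothetical names used only for illustration. The sketch also works on already-recognized text, whereas in the real engine detection runs on audio inside the ASR system.

```cpp
#include <string>
#include <utility>

// Hypothetical illustration of the two listening states described above.
enum class ListeningState { Passive, Active };

// Hypothetical gate operating on already-recognized text. It only models the
// state changes; real Wake Word detection happens on audio inside the engine.
class WakeWordGate
{
public:
    explicit WakeWordGate(std::string wakeWord) : wakeWord_(std::move(wakeWord)) {}

    // Feeds one recognized utterance. Returns the accepted command, or an
    // empty string when the utterance is ignored or is the Wake Word itself.
    std::string feed(std::string const & utterance)
    {
        if (state_ == ListeningState::Passive)
        {
            if (utterance == wakeWord_)
                state_ = ListeningState::Active; // Wake Word heard: start active listening
            return {};                           // Everything is ignored while passive
        }
        state_ = ListeningState::Passive;        // One command per wake-up, then back to idle
        return utterance;
    }

    ListeningState state() const { return state_; }

private:
    std::string    wakeWord_;
    ListeningState state_ = ListeningState::Passive;
};
```

For example, feeding "coffee", "Computer", and "espresso" in that order ignores "coffee", switches to active listening on "Computer", and returns "espresso" as a command before going back to passive listening.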

How to use it?

In this tutorial, we'll showcase how to create a wake word and use it to trigger our ASR engine to start listening and processing voice commands.

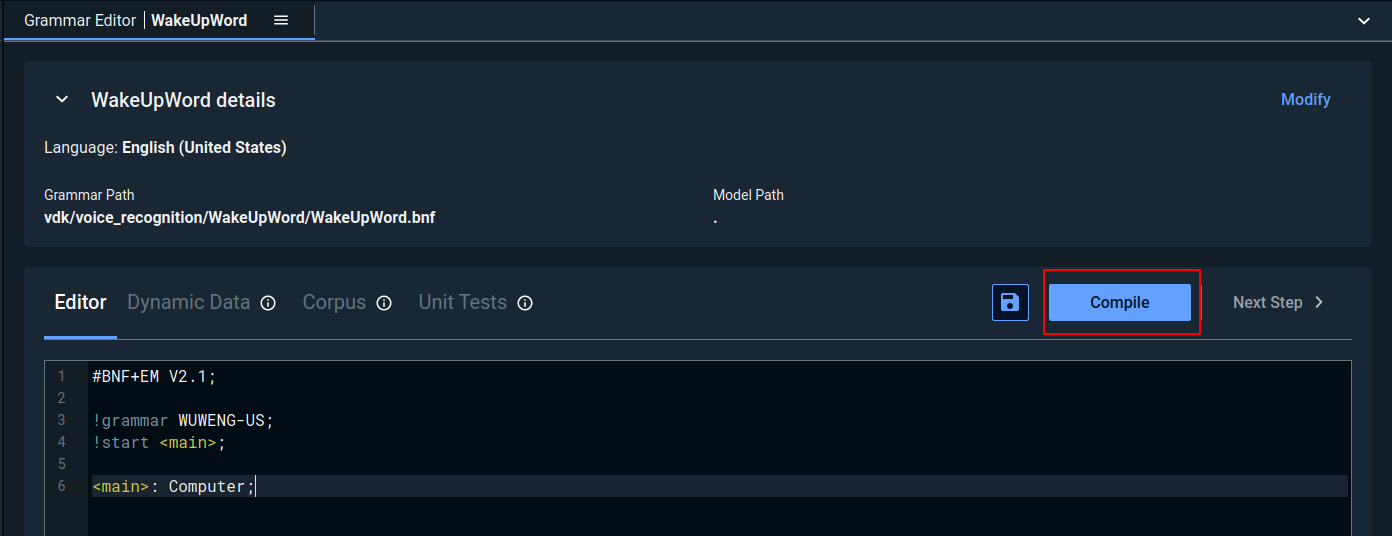

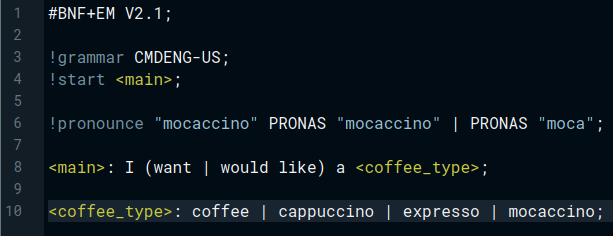

To create a Wake Word, we first define it in a grammar written in a BNF-like notation. Please refer to this tutorial to learn more about writing grammars.


Note the use of the word "Computer" as our Wake Word.

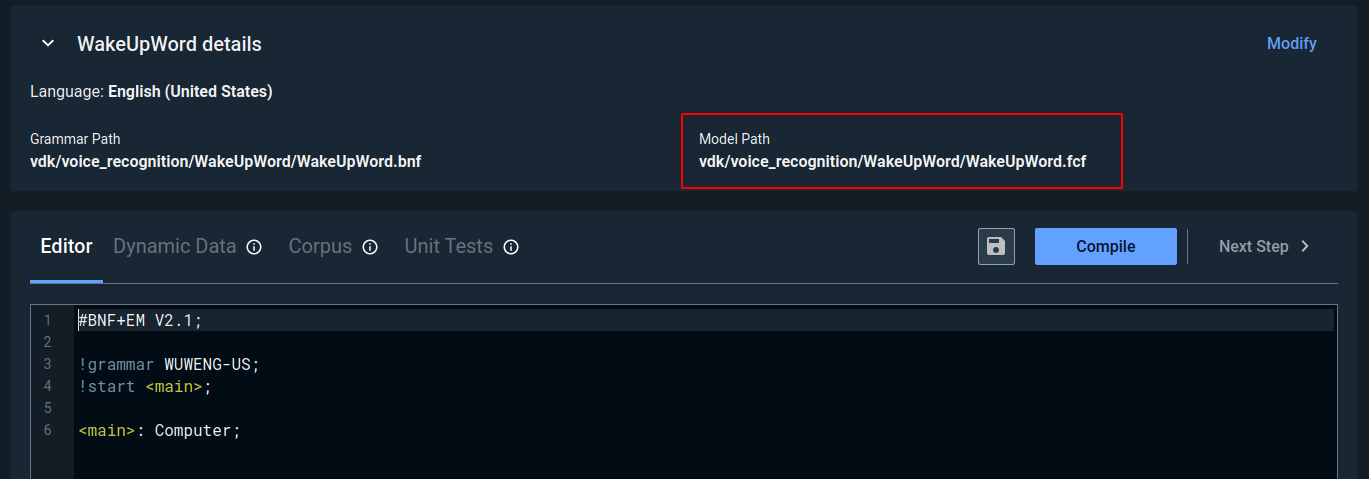

Now, we must compile our grammar to generate its binary version.

When compilation finishes, the binary version is saved in the same directory as the grammar source.

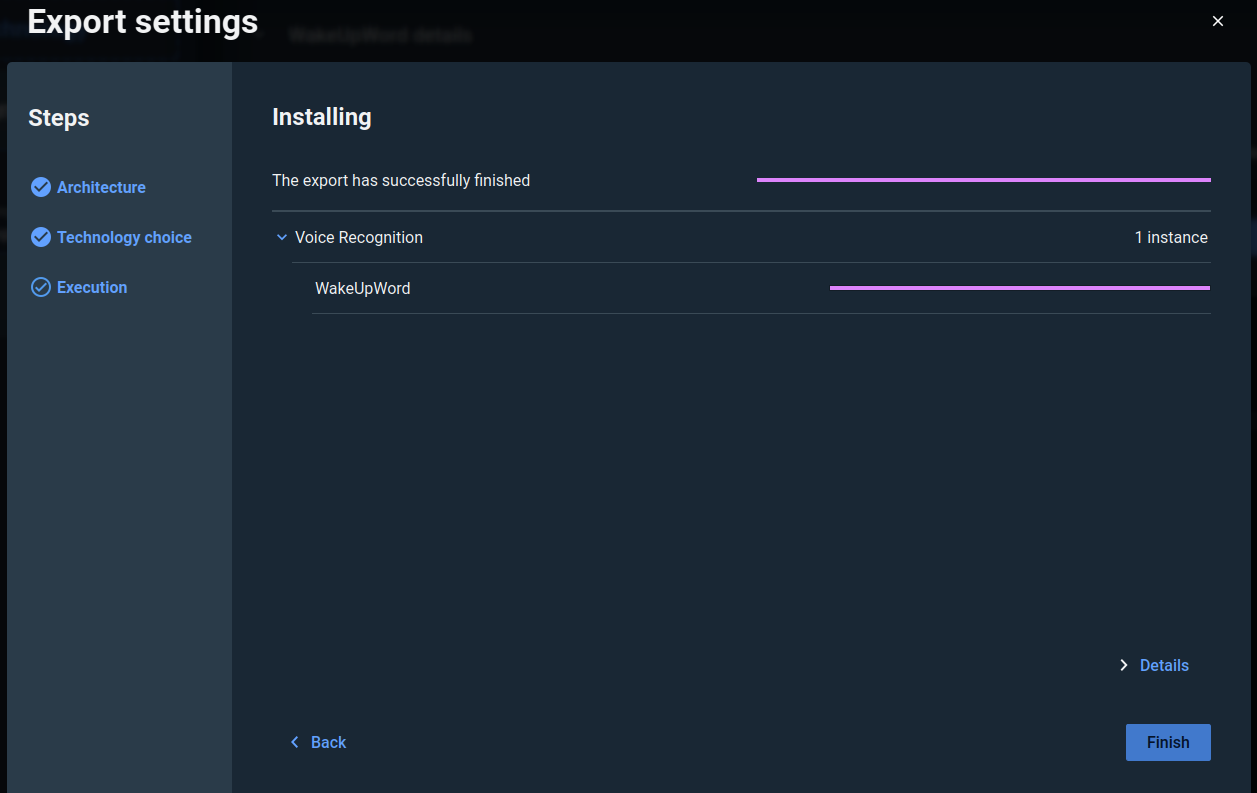

Finally, we can export the project to generate the configuration files and data the engine needs. To do that, click the Export Project button and follow the instructions.

We can now start using our Wake Word model. The code example below bootstraps the ASR engine and sets up the microphone so we can say our Wake Word. The program runs until the Wake Word is detected. Note that this example uses the vsdk-vasr SDK; make sure to use the SDK that matches your license permissions.

A high confidence threshold is recommended to eliminate false positives: the Wake Word gates everything the engine does next, so false triggers matter more here than in standard voice recognition tasks. We've used 5000 as the threshold, but you can adjust it to your environment and use case.
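One way to choose a threshold for your environment is to log the confidence values that the example prints (the `Hypothesis: '...' (confidence: N)` lines) for several utterances of the Wake Word and of other speech, and then check how candidate thresholds separate them. The helper below is a hypothetical sketch: `evaluateThreshold` and `ThresholdStats` are not part of VSDK, and the confidences are plain integers you collect yourself. It uses the same `>=` comparison as the example code.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical result of testing one candidate threshold on logged confidences.
struct ThresholdStats
{
    int missedWakeWords; // Wake Word spoken, but confidence < threshold (false negatives)
    int falseWakeUps;    // Other speech or noise with confidence >= threshold (false positives)
};

// Counts misses and false wake-ups for `threshold`, using the same
// acceptance rule as the tutorial: confidence >= threshold.
ThresholdStats evaluateThreshold(std::vector<int> const & wakeWordConfidences,
                                 std::vector<int> const & otherConfidences,
                                 int threshold)
{
    auto const rejected = [threshold] (int c) { return c < threshold; };
    auto const accepted = [threshold] (int c) { return c >= threshold; };

    ThresholdStats stats{};
    stats.missedWakeWords = static_cast<int>(
        std::count_if(wakeWordConfidences.begin(), wakeWordConfidences.end(), rejected));
    stats.falseWakeUps = static_cast<int>(
        std::count_if(otherConfidences.begin(), otherConfidences.end(), accepted));
    return stats;
}
```

Raising the threshold reduces false wake-ups but increases missed Wake Words, so the right value depends on how noisy your environment is.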

// VSDK includes

#include <vsdk/asr/vasr.hpp>

#include <vsdk/audio/producers/PaMicrophone.hpp>

#include <vsdk/utils/Misc.hpp>

#include <vsdk/utils/samples/EventLoop.hpp>

// C++ includes

#include <csignal>

using Vsdk::Asr::Engine;

using Vsdk::Asr::Recognizer;

using Vsdk::Utils::Samples::EventLoop;

static void onSignal(int s);

static void onAsrResult(Recognizer & recognizer, Recognizer::Result const & result);

static void installModel(std::string const & model, Recognizer & rec, int startTime);

namespace

{

constexpr auto confThreshold = 5000;

constexpr auto recognizerName = "rec";

constexpr auto wuwModel = "WakeUpWord";

} // !namespace

int main() try

{

// Event loop must be destroyed last

std::shared_ptr<void> const eventLoopGuard(nullptr, [] (auto) { EventLoop::destroy(); });

// Set up the ASR Engines with the path to the configuration file

auto const engine = Engine::make<Vsdk::Asr::Vasr::Engine>("config/vsdk.json");

auto rec = engine->recognizer(recognizerName);

rec->subscribe([&] (Recognizer::Result const & r) { onAsrResult(*rec, r); });

rec->setModel(wuwModel);

// Set up the input device

auto const mic = Vsdk::Audio::Producer::PaMicrophone::make();

// Set up the pipeline

Vsdk::Audio::Pipeline p;

p.setProducer(mic);

p.pushBackConsumer(rec);

EventLoop::instance().queue([&]

{

p.start();

fmt::print("Recognition started! Press CTRL+C to exit\n");

fmt::print("Say \"Computer\"\n");

});

EventLoop::instance().run(); // Block on run() and wait for jobs until explicit shutdown

return EXIT_SUCCESS;

}

catch (std::exception const & e)

{

fmt::print(stderr, "A fatal error occured:\n");

Vsdk::printExceptionStack(e);

return EXIT_FAILURE;

}

void onAsrResult(Recognizer & recognizer, Recognizer::Result const & result)

{

if (result.hypotheses.empty())

fmt::print("Result received with no hypothesis");

else

{

auto const & best = result.hypotheses[0]; // First is always the best

fmt::print("Hypothesis: '{}' (confidence: {})\n", best.text, best.confidence);

if (best.confidence >= confThreshold)

{

fmt::print("Tada! Congrats\n");

EventLoop::instance().shutdown();

return;

}

else

fmt::print("Try again, speak louder or clearer!\n");

}

installModel(wuwModel, recognizer, recognizer.upTime()); // -1 instead of upTime is ok too

}

void installModel(std::string const & model, Recognizer & recognizer, int startTime)

{

// The given lambda is passed to the EventLoop because calling setModel can't be done in

// the same thread as the recognition result callback, see Recognizer::subscribe()

EventLoop::instance().queue([model, startTime, &recognizer]

{

recognizer.setModel(model, startTime);

fmt::print("[{}] Model '{}' activated\n",

Vsdk::Utils::formatTimeMarker(std::chrono::milliseconds(startTime)), model);

});

}

void onSignal(int s)

{

std::signal(s, SIG_DFL);

fmt::print("\n{} received, stopping the program...\n", s == SIGINT ? "SIGINT" : "SIGTERM");

EventLoop::instance().shutdown(); // Unblock main thread once work is fully done

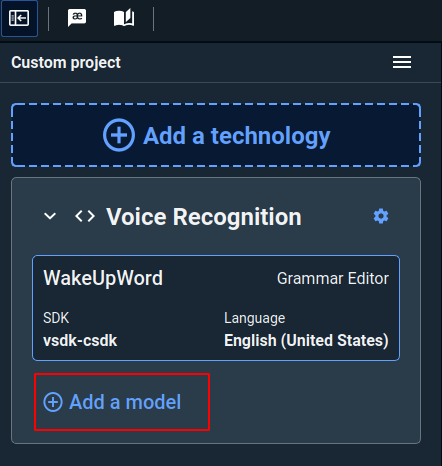

} The next step is adding our Command model by clicking the “Add a model” button.

The same steps used for the Wake Word model apply to the Command model. First, we should define our grammar, then compile it, and finally export the project.

Note that our Command model will let us order our favorite type of coffee ☕.

At this point, our Wake Word model and our Command model are not linked. We must chain them to ensure our ASR engine reacts only when detecting the Wake Word.

The best way to achieve this is to first set and install the Wake Word model, as we did in the first code example. Then, we take the best hypothesis and check whether its confidence has reached the threshold. If so, we install the Command model, starting at the end time of the Wake Word hypothesis.

We can achieve this by modifying the onAsrResult() callback behavior.

```cpp
// ...

namespace
{
    // ...
    constexpr auto cmdModel = "CmdModel";
} // !namespace

// ...

void onAsrResult(Recognizer & recognizer, Recognizer::Result const & result)
{
    if (result.hypotheses.empty())
        fmt::print("Result received with no hypothesis\n");
    else
    {
        auto const & best = result.hypotheses[0]; // First is always the best
        fmt::print("Hypothesis: '{}' (confidence: {})\n", best.text, best.confidence);

        // Confident result: chain the Command model, starting where this hypothesis ended
        if (best.confidence >= confThreshold)
            return installModel(cmdModel, recognizer, best.endTime);
        else
            fmt::print("Try again, speak louder or clearer!\n");
    }

    installModel(wuwModel, recognizer, recognizer.upTime()); // -1 instead of upTime is ok too
}
```

So rather than printing a message and ending the program when the Wake Word is detected, we now install the Command model instead.
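As written, the callback above picks the next model from the confidence alone, so after a confident command it installs the Command model again rather than returning to the Wake Word. If you want the engine to wait for the Wake Word after each command, one option is to also track which model produced the result. The SDK-independent sketch below illustrates that decision. `Hypothesis`, `NextModel`, and `nextModel` are hypothetical stand-ins for the VSDK result types and the callback logic; only the model names and the `confidence`/`endTime` fields come from this tutorial, and `upTime` plays the role of `recognizer.upTime()`.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for the fields the callback reads (not the VSDK type).
struct Hypothesis
{
    std::string text;
    int         confidence;
    int         endTime;   // End of the utterance, same time base as upTime
};

// Hypothetical description of the model to install next.
struct NextModel
{
    std::string model;     // Model to install
    int         startTime; // Where recognition with that model should start
};

// Model names taken from the tutorial's examples.
const std::string kWakeWordModel = "WakeUpWord";
const std::string kCommandModel  = "CmdModel";

// Decides which model to install after a result produced by `currentModel`.
NextModel nextModel(std::string const & currentModel,
                    std::vector<Hypothesis> const & hypotheses,
                    int threshold, int upTime)
{
    bool const accepted = !hypotheses.empty() && hypotheses.front().confidence >= threshold;

    // Confident Wake Word: chain the Command model right where the Wake Word ended
    if (currentModel == kWakeWordModel && accepted)
        return {kCommandModel, hypotheses.front().endTime};

    // Rejected Wake Word, or any Command result: listen for the Wake Word again
    return {kWakeWordModel, upTime};
}
```

In a real callback, you would act on an accepted command (for example, place the coffee order) before reinstalling the Wake Word model.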

Please refer to our chained-grammars sample for a fully detailed example.

Hopefully, you found this useful. Happy learning!