Frequently Asked Questions (FAQ)

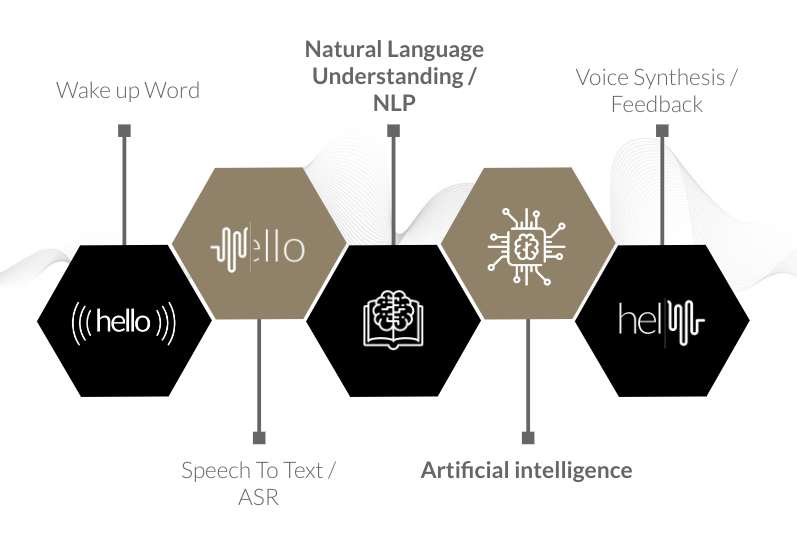

A voice assistant is made up of five parts:

Wake-up Word (WuW)

Mainly used with cloud recognition, the WuW allows a device to listen to a microphone continuously without sending data online or wasting CPU time: the audio is only processed further once the wake-up word has been detected.
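The gating idea can be shown in a minimal sketch. It assumes a hypothetical on-device detector passed in as `detect_wake_word`; the real detection engine and its API are not shown here. Every frame is checked locally and discarded until the detector fires, and only the frames that follow are kept for the cloud recognizer.

```python
from typing import Callable, Iterable, List

Frame = bytes  # one chunk of raw microphone audio


def gate_audio(
    frames: Iterable[Frame],
    detect_wake_word: Callable[[Frame], bool],  # hypothetical on-device detector
    max_command_frames: int,
) -> List[Frame]:
    """Keep only the frames that follow the wake-up word.

    Before detection, each frame is checked locally and then discarded, so no
    audio leaves the device. After detection, up to `max_command_frames`
    frames are collected for the cloud recognizer.
    """
    forwarded: List[Frame] = []
    awake = False
    for frame in frames:
        if not awake:
            awake = detect_wake_word(frame)  # cheap local check, nothing sent
            continue  # the frame containing the wake-up word is not forwarded
        forwarded.append(frame)
        if len(forwarded) >= max_command_frames:
            break  # command window closed; a real device would re-arm here
    return forwarded
```

Here is a worked example. Take the frames `[b"noise", b"WAKE", b"cmd1", b"cmd2", b"cmd3"]`, a detector that matches `b"WAKE"`, and a window of 2 frames. Only `[b"cmd1", b"cmd2"]` is returned.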

A good WuW has at least three syllables, separated by consonants, and is based on uncommon words so that it is not triggered by everyday conversation.
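The "at least three syllables" guideline can be screened roughly by counting groups of vowels. This is a hypothetical heuristic based on English spelling; silent letters and other languages will fool it. It is only a quick filter for candidate names, not a phonetic analysis.

```python
import re

# Hypothetical heuristic: one syllable per run of consecutive vowels.
VOWEL_GROUPS = re.compile(r"[aeiouy]+")


def estimate_syllables(word: str) -> int:
    """Approximate a word's syllable count from its English spelling."""
    return len(VOWEL_GROUPS.findall(word.lower()))


def long_enough(wake_word: str, minimum: int = 3) -> bool:
    """Check the 'at least three syllables' rule over the whole phrase."""
    return sum(estimate_syllables(w) for w in wake_word.split()) >= minimum
```

For example, `estimate_syllables("Vivoka")` gives 3, so `long_enough("Hey Vivoka")` is true while `long_enough("Hey")` is false.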

Ideally, it should also sound the same in every language you want to address, and you can use it as branding for your product.

Speech to Text (STT)

This module transcribes speech audio into text. There are two families of STT:

FreeSpeech: this system can transcribe a large vocabulary, whose output then needs to be analysed by an NLP module.

Grammar-based: this system only transcribes the vocabulary it was set up for.
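A small example shows why a grammar-based recognizer can only return what it was set up for. The sketch expands a tiny hypothetical grammar, written as a sequence of allowed words per position, into every sentence it accepts. Real grammar formats are richer (for example W3C SRGS, or whatever format the SDK uses), so this toy representation is an assumption for illustration only.

```python
from itertools import product
from typing import List, Sequence

# Hypothetical grammar: each inner list holds the words allowed at that position.
Grammar = Sequence[Sequence[str]]


def expand(grammar: Grammar) -> List[str]:
    """Enumerate every sentence the grammar accepts (its whole vocabulary)."""
    return [" ".join(choice) for choice in product(*grammar)]


def in_grammar(grammar: Grammar, sentence: str) -> bool:
    """A grammar-based recognizer can only ever output one of these sentences."""
    return sentence in expand(grammar)


LIGHTS = [["turn"], ["on", "off"], ["the"], ["light", "radio"]]
```

`expand(LIGHTS)` yields exactly four sentences, from "turn on the light" to "turn off the radio". A phrase such as "open the door" can never be produced. A FreeSpeech system has no such closed list, which is why its output needs further analysis.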

Natural Language Understanding (NLU)

Natural-language understanding (NLU) is the comprehension by computers of the structure and meaning of human language (e.g., English, Spanish, Japanese), allowing users to interact with the computer using natural sentences.

Artificial Intelligence (AI)

This part is a melting pot of features in charge of executing the user's request: calling APIs, editing a database, or anything else.

Voice Synthesis

In response to a voice command, feedback should be given to the user. It can be a synthesized voice generated by a text-to-speech (TTS) module.
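The five parts are chained one after the other. The sketch below models each stage as a plain function so that the data flow is visible. The class, its field names and the stub stages are hypothetical and do not correspond to the SDK's API; bytes stand in for audio.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class Intent:
    name: str
    slots: Dict[str, str] = field(default_factory=dict)


@dataclass
class VoiceAssistant:
    """Chains the five parts; every stage is a hypothetical stand-in."""

    wake_word: Callable[[bytes], bool]             # 1. Wake-up Word
    speech_to_text: Callable[[bytes], str]         # 2. STT
    understand: Callable[[str], Optional[Intent]]  # 3. NLU
    execute: Callable[[Intent], str]               # 4. AI: APIs, database...
    synthesize: Callable[[str], bytes]             # 5. Voice synthesis (TTS)

    def handle(self, trigger: bytes, command: bytes) -> Optional[bytes]:
        if not self.wake_word(trigger):
            return None  # no wake-up word: nothing is transcribed or sent
        text = self.speech_to_text(command)
        intent = self.understand(text)
        if intent is not None:
            reply = self.execute(intent)
        else:
            reply = "Sorry, I did not understand."
        return self.synthesize(reply)


# Toy stages: "STT" and "TTS" simply decode and encode bytes.
assistant = VoiceAssistant(
    wake_word=lambda audio: audio == b"hey-device",
    speech_to_text=lambda audio: audio.decode(),
    understand=lambda text: (
        Intent("lights", {"state": "off" if "off" in text else "on"})
        if "light" in text
        else None
    ),
    execute=lambda intent: f"Lights turned {intent.slots['state']}.",
    synthesize=lambda text: text.encode(),
)
```

Here, `assistant.handle(b"hey-device", b"turn off the light")` returns `b"Lights turned off."`, and any trigger other than the wake-up word returns `None`. Keeping each stage behind its own function makes it easy to swap, for example, a grammar-based STT for a FreeSpeech one.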

Several samples are available. Choose the one that matches your use case (ASR, TTS, ASR+TTS, ...) and build it to discover how to use our SDK.

These samples are easy to understand, and integrating them into an existing project should be straightforward.

We rely on Conan to fetch the dependencies and on CMake to build. All of our samples contain the required files to do so.

We support Speech Synthesis Markup Language (SSML) tagging to customize the flow of speech with any voice.

SSML is a standard way to control aspects of speech such as pronunciation, volume, pitch and rate across different voices.

See the Speech Synthesis Markup Language (SSML) page for the list of supported markups.

There are two ways to use multiple languages with the voice synthesis technology:

Choose a multi-language voice that supports both German and English, for example Anna-ml or Petra-ml, and use the SSML `lang` markup to select the language of your choice:

`English, <lang xml:lang="de">Deutsch</lang>, English.`

Alternatively, switch between voices when you want to say a word in a different language, using the SSML `voice` markup:

`English, <voice xml:lang="de">Deutsch</voice>, English.`
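When the text is assembled at runtime, the `lang` markup can be generated instead of written by hand. This is a minimal sketch under a few assumptions:

- segments arrive as hypothetical (language code, text) pairs;
- text in the default language needs no tag;
- the output is a fragment without a root element, like the `lang` example above.

The text is XML-escaped so that characters such as `&` or `<` cannot break the markup.

```python
from typing import Iterable, Tuple
from xml.sax.saxutils import escape, quoteattr


def build_multilingual_ssml(
    segments: Iterable[Tuple[str, str]],  # hypothetical (lang, text) pairs
    default_lang: str,
) -> str:
    """Wrap every segment not in `default_lang` in an SSML <lang> element."""
    parts = []
    for lang, text in segments:
        safe_text = escape(text)  # escapes &, < and >
        if lang == default_lang:
            parts.append(safe_text)
        else:
            parts.append(f"<lang xml:lang={quoteattr(lang)}>{safe_text}</lang>")
    return "".join(parts)
```

For example, `build_multilingual_ssml([("en", "English, "), ("de", "Deutsch"), ("en", ", English.")], "en")` returns `English, <lang xml:lang="de">Deutsch</lang>, English.`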

See the SDK specifics for Voice Synthesis page for details about the features supported by each SDK.

If you want a word to be pronounced differently, you can use the `sub` or `phoneme` SSML markup.

The `sub` markup substitutes text for the purposes of pronunciation:

`<sub alias="Voice Development Kit">VDK</sub>`

The `phoneme` markup provides a phonetic pronunciation for the contained text:

`<phoneme alphabet="ipa" ph="vivo͡ʊkə">Vivoka</phoneme>`

See the Speech Synthesis Markup Language (SSML) page for more details on how to use the `sub` and `phoneme` markups.
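When the same acronyms come up repeatedly, the `sub` markup can be applied from a small pronunciation lexicon instead of by hand. The sketch makes two assumptions:

- a hypothetical dictionary maps each written form to its spoken alias;
- matching is whole-word on plain-text input, so any existing tags in the input would be escaped.

```python
import re
from typing import Dict
from xml.sax.saxutils import escape, quoteattr


def apply_sub_lexicon(text: str, lexicon: Dict[str, str]) -> str:
    """Wrap each whole-word lexicon entry found in `text` in <sub alias="...">."""
    if not lexicon:
        return escape(text)
    # Try longer entries first so overlapping entries prefer the longest match.
    keys = sorted(lexicon, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, keys)) + r")\b")
    out, last = [], 0
    for match in pattern.finditer(text):
        out.append(escape(text[last:match.start()]))  # text before the entry
        word = match.group(1)
        out.append(f"<sub alias={quoteattr(lexicon[word])}>{escape(word)}</sub>")
        last = match.end()
    out.append(escape(text[last:]))  # remaining text after the last entry
    return "".join(out)
```

With `{"VDK": "Voice Development Kit"}`, the sentence "Download the VDK today." becomes `Download the <sub alias="Voice Development Kit">VDK</sub> today.` Words that merely contain an entry, such as "VDKs", are left untouched.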

Our solution can run on a Raspberry Pi. We use both the Raspberry Pi 3B+ and the Raspberry Pi 4 (32-bit and 64-bit) as test devices.